What Problem Does GEPA Solve?

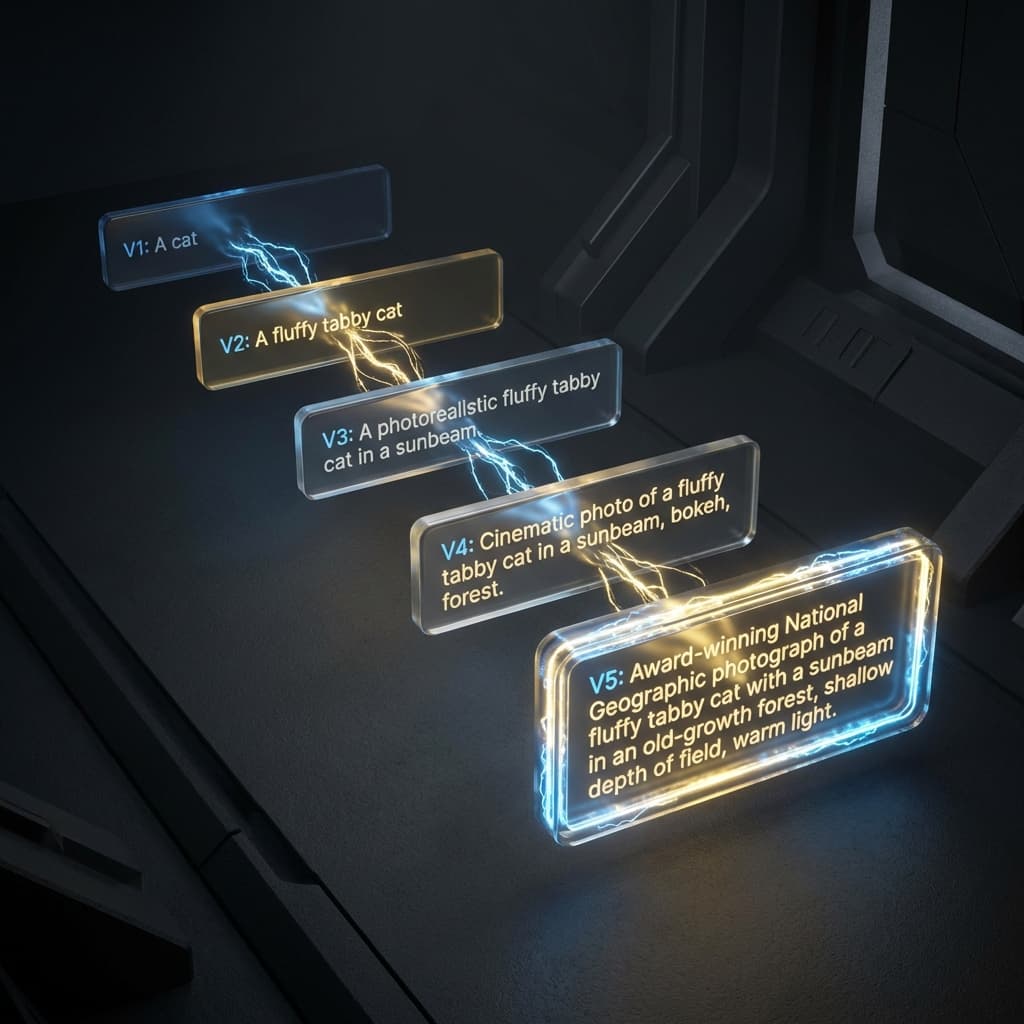

Imagine you're writing a prompt for an AI to answer math questions. Your first attempt might be: "Solve this math problem: {question}"

This works okay, but not great. So you try: "You are a math expert. Think step by step. Solve: {question}"

Better! But still not perfect. You keep tweaking, testing, tweaking, testing... This manual process is exhausting. GEPA automates it.

The Core Idea: Treat Prompts Like Living Things

GEPA borrows ideas from biological evolution. In nature:

- Creatures are born with DNA (their "instructions")

- They live and get tested by the environment

- The ones that survive pass on their DNA

- Their children have slightly modified DNA (mutations)

- Over generations, creatures get better at surviving

GEPA does the same thing, but with prompts instead of creatures:

- A prompt is born (your initial attempt)

- It gets tested on real tasks

- Good prompts "survive" and get to create children

- Children are modified versions (mutations)

- Over iterations, prompts get better at the task

Key Terms Explained

Candidate

A "candidate" is simply one version of your prompt (or set of prompts). Think of it like a job applicant. You have many candidates applying for the job of "best prompt." Each candidate is trying to prove they're the best.

    # A candidate is just a dictionary mapping names to text
    candidate = {
        "system_prompt": "You are a helpful math tutor...",
        "format_instructions": "Show your work step by step...",
    }

Mutation

In biology, mutation means a small random change to DNA. In GEPA, mutation means changing the prompt text.

But here's the clever part: GEPA doesn't make random changes. It uses an LLM to intelligently suggest improvements based on what went wrong.

Example of mutation:

BEFORE (Parent): "Solve this math problem."

The LLM notices: "This prompt fails on word problems because it doesn't tell the model to identify what's being asked."

AFTER (Child/Mutant): "Read the problem carefully. Identify what is being asked. Then solve step by step."

The child prompt is a "mutation" of the parent — similar, but improved.
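Here is a minimal sketch of that mutation step. The helper `propose_mutation` and the stand-in `fake_llm` are hypothetical names made up for illustration; they are not part of GEPA's API, and a real run would call an actual model where `fake_llm` is used.

```python
from typing import Callable


def propose_mutation(parent: str, feedback: str, llm: Callable[[str], str]) -> str:
    """Ask an LLM to rewrite `parent` so that it addresses `feedback`."""
    request = (
        "Current prompt:\n" + parent + "\n\n"
        "Observed problem:\n" + feedback + "\n\n"
        "Rewrite the prompt to fix the problem. Return only the new prompt."
    )
    return llm(request).strip()


def fake_llm(request: str) -> str:
    # Stand-in for a real model call (assumption): always returns the
    # child prompt from the example above.
    return (
        "Read the problem carefully. Identify what is being asked. "
        "Then solve step by step."
    )


parent = "Solve this math problem."
feedback = "Fails on word problems: never tells the model to identify what is being asked."
child = propose_mutation(parent, feedback, fake_llm)
print(child)
```

The point of the design is that the change is driven by the feedback text rather than by random edits.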

Selection

You can't keep every prompt forever — you'd have thousands. Selection means choosing which prompts to keep and which to discard.

Think of it like a talent show:

- Round 1: 100 contestants

- Round 2: Keep the best 20

- Round 3: Keep the best 5

- Winner: The single best

GEPA uses selection to focus on promising prompts rather than wasting time on bad ones.
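The talent-show analogy corresponds to plain top-k selection, sketched below. The function name `select_top_k` and the scores are made up for illustration, and this is not necessarily the strategy GEPA itself uses to choose candidates.

```python
def select_top_k(scores: dict[str, float], k: int) -> list[str]:
    """Return the names of the k highest-scoring candidates, best first."""
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return ranked[:k]


# Hypothetical scores for four candidate prompts.
scores = {"prompt_a": 0.42, "prompt_b": 0.81, "prompt_c": 0.67, "prompt_d": 0.15}
survivors = select_top_k(scores, k=2)
print(survivors)  # ['prompt_b', 'prompt_c']
```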

Pareto Frontier

This is a crucial concept. Let me explain with an example.

Imagine you're rating restaurants on two criteria: taste and price.

| Restaurant | Taste (higher = better) | Price (lower = better) |
|---|---|---|
| A | 9 | 8 (expensive) |
| B | 7 | 3 (cheap) |
| C | 6 | 7 |
| D | 8 | 4 |

Which is "best"? It depends on what you care about!

- If you want the tastiest: A

- If you want the cheapest: B

- If you want a balance: D

Restaurant C is clearly worse than D (D beats it on BOTH taste AND price). We say D "dominates" C. B dominates C as well.

The Pareto frontier is the set of options that no other option dominates: nothing else is at least as good on every criterion and strictly better on at least one. Here, the frontier is {A, B, D}. These are all "good" in different ways.

In GEPA: Different prompts might excel on different types of problems. One prompt might be great at algebra but bad at word problems. Another might be the opposite. The Pareto frontier keeps BOTH because each is "best" at something.
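The restaurant example can be checked with a few lines of Python. This is an illustrative sketch using the table's numbers; the function names `dominates` and `pareto_frontier` are my own, not GEPA's.

```python
# (taste, price): higher taste is better, lower price is better.
restaurants = {"A": (9, 8), "B": (7, 3), "C": (6, 7), "D": (8, 4)}


def dominates(x: tuple[int, int], y: tuple[int, int]) -> bool:
    """True if x is at least as good as y on both criteria and strictly better on one."""
    taste_x, price_x = x
    taste_y, price_y = y
    no_worse = taste_x >= taste_y and price_x <= price_y
    strictly_better = taste_x > taste_y or price_x < price_y
    return no_worse and strictly_better


def pareto_frontier(options: dict[str, tuple[int, int]]) -> list[str]:
    """Return the names of all options that no other option dominates."""
    return sorted(
        name
        for name, value in options.items()
        if not any(dominates(other, value) for other in options.values())
    )


frontier = pareto_frontier(restaurants)
print(frontier)  # ['A', 'B', 'D']
```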

Reflection

This is GEPA's secret weapon. Instead of blind trial-and-error, GEPA thinks about why things failed.

Imagine a student who gets a math test back:

- Bad approach: "I got 70%. I'll just study more."

- Reflective approach: "I got 70%. Let me look at what I got wrong... Ah, I keep making sign errors in negative numbers. I need to be more careful with negatives."

GEPA does the reflective approach. It looks at failed examples and asks an LLM: "What went wrong? How can we fix the prompt?"
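As a rough sketch, the question sent to the reflection LLM could be assembled like this. The function `build_reflection_request`, the record fields, and the train example are all assumptions made for illustration; GEPA's actual reflection prompt may look quite different.

```python
def build_reflection_request(prompt: str, failures: list[dict]) -> str:
    """Format a prompt and its failed examples into a question for a reflection LLM."""
    lines = ["Prompt under test:", prompt, "", "Failed examples:"]
    for i, f in enumerate(failures, start=1):
        lines.append(
            f"{i}. input: {f['input']} | expected: {f['expected']} | got: {f['got']}"
        )
    lines += ["", "What went wrong? How can we fix the prompt?"]
    return "\n".join(lines)


# A made-up failure record, used only to show the shape of the data.
failures = [
    {
        "input": "A train covers 60 miles in 2 hours. What is its speed?",
        "expected": "30 mph",
        "got": "120",
    },
]
request = build_reflection_request("Solve this math problem.", failures)
print(request)
```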

Trajectory / Trace

A trajectory is the full record of what happened when running the prompt.

Think of it like a recipe attempt:

- Input: "Make chocolate cake"

- Step 1: Got flour ✓

- Step 2: Added sugar ✓

- Step 3: Set oven to 500°F ✗ (too hot!)

- Step 4: Cake burned

- Output: Burned cake

- Score: 2/10

The trajectory includes ALL the steps, not just the final score. This helps GEPA understand WHERE things went wrong (Step 3), not just THAT things went wrong.
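One way to picture a trajectory in code is a small record type that keeps every step, so the first failure can be located. The `Step` and `Trajectory` classes below are hypothetical and only mirror the cake example; they are not GEPA's data structures. Marking Step 4 as failed is my reading of "Cake burned".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    description: str
    ok: bool


@dataclass
class Trajectory:
    task_input: str
    steps: list[Step] = field(default_factory=list)
    output: str = ""
    score: float = 0.0

    def first_failure(self) -> Optional[tuple[int, Step]]:
        """Return (1-based index, step) for the first failed step, or None."""
        for i, step in enumerate(self.steps, start=1):
            if not step.ok:
                return i, step
        return None


cake = Trajectory(
    task_input="Make chocolate cake",
    steps=[
        Step("Got flour", ok=True),
        Step("Added sugar", ok=True),
        Step("Set oven to 500°F", ok=False),
        Step("Cake burned", ok=False),
    ],
    output="Burned cake",
    score=0.2,  # 2/10
)
index, step = cake.first_failure()
print(index, step.description)  # 3 Set oven to 500°F
```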

Epoch

An epoch means one complete pass through all your training data.

If you have 100 training examples and process them in batches of 10:

- Batch 1: examples 1-10

- Batch 2: examples 11-20

- ...

- Batch 10: examples 91-100

- End of Epoch 1

- Shuffle the data

- Batch 1: examples 47, 3, 82... (random order now)

- ...and so on
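The batching scheme above can be sketched as a generator. The name `epoch_batches` and the fixed seed are my own choices for illustration; this is not GEPA's BatchSampler.

```python
import random
from typing import Iterator


def epoch_batches(
    num_examples: int, batch_size: int, num_epochs: int, seed: int = 0
) -> Iterator[tuple[int, list[int]]]:
    """Yield (epoch number, example ids) pairs, reshuffling after each epoch."""
    rng = random.Random(seed)
    order = list(range(1, num_examples + 1))
    for epoch in range(num_epochs):
        if epoch > 0:
            rng.shuffle(order)  # new random order for every epoch after the first
        for start in range(0, num_examples, batch_size):
            yield epoch + 1, order[start:start + batch_size]


batches = list(epoch_batches(num_examples=100, batch_size=10, num_epochs=2))
print(batches[0])   # (1, [1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
print(len(batches)) # 20
```

Every example still appears exactly once per epoch; only the order changes.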

Minibatch

Instead of testing a prompt on ALL your data at once (slow and expensive), you test on a small minibatch — maybe just 3-5 examples.

This is like a chef tasting a dish while cooking rather than waiting until the entire banquet is prepared.

Why Each Component Exists

Let me map the code components to their purpose:

| Component | Real-World Analogy | Purpose |
|---|---|---|
| GEPAAdapter | Translator | Connects GEPA to YOUR specific system |
| CandidateSelector | Talent scout | Picks which prompts to improve next |
| ReflectiveMutationProposer | Writing coach | Suggests improvements to prompts |
| BatchSampler | Test administrator | Picks which examples to test on |
| ComponentSelector | Focus advisor | Decides which part of prompt to edit |
| StopperProtocol | Race official | Decides when optimization is done |
| EvaluationPolicy | Grading system | Decides how to score prompts |

The Full Process, Step by Step

Let me walk through what actually happens:

Step 0: You Provide a Starting Point

Your seed prompt: "Answer the question: {question}"

Your training data: 100 math problems with answers

Your metric: "Did the model get the right answer?" (0 or 1)

Step 1: Test the Starting Prompt

GEPA runs your prompt on a few examples (a minibatch):

Example 1: "What is 2+2?" → Model says "4" → Score: 1 ✓

Example 2: "If John has 3 apples..." → Model says "7" → Score: 0 ✗

Example 3: "Solve: 3x = 9" → Model says "x = 3" → Score: 1 ✓

Average score: 0.67 (2 out of 3 correct)
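Scoring a minibatch with a 0-or-1 metric is just an average. In the sketch below, the function names are hypothetical, and the reference answer "5" for the second item is invented for illustration, since the word problem above is abbreviated and its correct answer is not given.

```python
def exact_match(prediction: str, answer: str) -> int:
    """Return 1 if the prediction equals the reference answer, else 0."""
    return int(prediction.strip() == answer.strip())


def evaluate_minibatch(pairs: list[tuple[str, str]]) -> tuple[list[int], float]:
    """Score each (prediction, answer) pair and return the scores and their mean."""
    scores = [exact_match(prediction, answer) for prediction, answer in pairs]
    return scores, sum(scores) / len(scores)


# (model output, reference answer); the middle reference answer is made up.
minibatch = [("4", "4"), ("7", "5"), ("x = 3", "x = 3")]
scores, average = evaluate_minibatch(minibatch)
print(scores, round(average, 2))  # [1, 0, 1] 0.67
```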

Step 2: Reflect on Failures

GEPA asks a smart LLM: "Here's the prompt and what happened. Why did Example 2 fail?"

The reflection LLM analyzes:

"The prompt failed on the word problem because it doesn't instruct the model to:

1. Identify the key information
2. Set up an equation
3. Solve systematically

The model just guessed instead of reasoning through it."

Step 3: Propose a Mutation

Based on the reflection, GEPA generates a new prompt:

OLD: "Answer the question: {question}"

NEW: "Read the problem carefully. Identify the key numbers and what is being asked. Set up your approach, then solve step by step. Question: {question}"

Step 4: Test the New Prompt

The mutated prompt is tested:

Example 1: Score 1 ✓

Example 2: Score 1 ✓ (now works!)

Example 3: Score 1 ✓

Average score: 1.0 (3 out of 3 correct)

Step 5: Selection — Keep the Good Ones

Now GEPA has two candidates:

- Original prompt: scores 0.67

- Mutated prompt: scores 1.0

The mutated prompt is clearly better, so it becomes the new "best."

But GEPA might keep BOTH if they each excel on different types of problems (Pareto frontier).

Step 6: Repeat

Go back to Step 1 with the new best prompt(s). Keep improving until:

- You've used up your budget (max evaluations)

- The prompt is "good enough"

- No more improvement is happening
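These three stopping conditions can be sketched as a single check. The function `should_stop` and its parameters (`target_score`, `patience`) are assumptions made for illustration; this is not the interface of GEPA's StopperProtocol.

```python
from typing import Optional


def should_stop(
    evals_used: int,
    max_evals: int,
    history: list[float],
    target_score: float,
    patience: int,
) -> Optional[str]:
    """Return the reason to stop, or None to keep going.

    `history` holds one score per completed iteration, oldest first.
    """
    if evals_used >= max_evals:
        return "budget exhausted"
    if history and max(history) >= target_score:
        return "good enough"
    # No improvement: none of the last `patience` scores beats the earlier best.
    if len(history) > patience and max(history[-patience:]) <= max(history[:-patience]):
        return "no improvement"
    return None


print(should_stop(100, 100, [0.5], 0.95, 3))                       # budget exhausted
print(should_stop(10, 100, [0.5, 0.97], 0.95, 3))                  # good enough
print(should_stop(10, 100, [0.5, 0.7, 0.6, 0.7, 0.65], 0.95, 3))   # no improvement
print(should_stop(10, 100, [0.5, 0.6, 0.7], 0.95, 3))              # None
```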

Step 7: Return the Best

After many iterations, GEPA returns the best prompt it found.
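To see the whole loop in one place, here is a deliberately tiny toy version. Everything in it is a stand-in: `toy_score` replaces running a model on real data, `toy_reflect_and_mutate` replaces the reflection LLM, and the loop greedily keeps a single best prompt instead of a Pareto frontier. It illustrates the evaluate, mutate, select cycle only and is not GEPA's implementation.

```python
# Instructions that the toy "task" happens to reward (an assumption for the demo).
HINTS = [
    "Identify what is being asked.",
    "Solve step by step.",
    "Check your answer.",
]


def toy_score(prompt: str) -> float:
    """Pretend evaluation: fraction of useful hints the prompt contains."""
    return sum(hint in prompt for hint in HINTS) / len(HINTS)


def toy_reflect_and_mutate(prompt: str) -> str:
    """Pretend reflection: append the first hint the prompt is missing."""
    for hint in HINTS:
        if hint not in prompt:
            return prompt + " " + hint
    return prompt


def optimize(seed_prompt: str, max_evals: int) -> tuple[str, float, int]:
    """Greedy loop: mutate the best prompt, keep the child only if it scores higher."""
    best, best_score = seed_prompt, toy_score(seed_prompt)
    evals = 1
    while evals < max_evals and best_score < 1.0:
        child = toy_reflect_and_mutate(best)
        child_score = toy_score(child)
        evals += 1
        if child_score > best_score:  # selection
            best, best_score = child, child_score
    return best, best_score, evals


best, score, evals = optimize("Answer the question: {question}", max_evals=10)
print(score, evals)
print(best)
```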

Why Is Selection Needed?

Imagine you're breeding dogs for speed. You have 100 dogs.

Without selection: You let ALL dogs breed randomly. Slow dogs have puppies too. After 10 generations, average speed hasn't improved much.

With selection: You only let the TOP 10 fastest dogs breed. After 10 generations, average speed has improved dramatically.

Selection focuses your resources on the most promising candidates. Without it, you waste time trying to improve bad prompts instead of building on good ones.

Why Is Mutation Needed?

If you only kept the best prompt and never changed it, you'd be stuck. The original prompt has fundamental limitations.

Mutation creates variation — new ideas to try. Most mutations might be worse, but occasionally one is better. Selection keeps the better ones.

It's like brainstorming:

- Generate many ideas (mutation)

- Pick the best ones (selection)

- Build on those (repeat)

Why Pareto Frontier Instead of Just "The Best"?

Simple "pick the best" has a problem: it gets stuck.

Imagine prompt A scores 90% on algebra but 50% on word problems. Prompt B scores 60% on both. By average, A (70%) beats B (60%).

But what if you evolved B and it became C, which scores 85% on both? That beats A!

If you only kept A, you'd never discover C. B stays on the Pareto frontier because it beats A on word problems (60% vs. 50%), so it remains available as a parent. Keeping diverse prompts alive lets you discover solutions you'd otherwise miss.
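The A, B, C example can be replayed in code. The rule used here, "keep every candidate that is best on at least one task", is one simplified reading of the idea, and `per_task_frontier` is a made-up name; the exact rule GEPA applies may differ.

```python
def per_task_frontier(scores: dict[str, dict[str, float]]) -> set[str]:
    """Keep every candidate that achieves the top score on at least one task."""
    tasks = {task for per_task in scores.values() for task in per_task}
    keep: set[str] = set()
    for task in tasks:
        top = max(per_task[task] for per_task in scores.values())
        keep |= {name for name, per_task in scores.items() if per_task[task] == top}
    return keep


def best_by_average(scores: dict[str, dict[str, float]]) -> str:
    """Return the single candidate with the highest mean score."""
    return max(scores, key=lambda name: sum(scores[name].values()) / len(scores[name]))


scores = {
    "A": {"algebra": 0.90, "word": 0.50},
    "B": {"algebra": 0.60, "word": 0.60},
}
before = per_task_frontier(scores)       # A and B both survive
single_best = best_by_average(scores)    # averaging alone would keep only A

# Suppose B is later evolved into C.
scores["C"] = {"algebra": 0.85, "word": 0.85}
after = per_task_frontier(scores)        # A and C; B is no longer best anywhere
new_best = best_by_average(scores)

print(sorted(before), single_best, sorted(after), new_best)
```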

What Makes GEPA Special?

Many prompt optimizers rely on random edits or fixed search patterns. GEPA is different because:

- It thinks about WHY things failed (reflection), not just THAT they failed

- It keeps diverse solutions (Pareto frontier), not just the single best

- It uses language to improve language — an LLM suggests prompt improvements

The GEPA authors report that it outperforms GRPO, a reinforcement-learning method, by 10% on average and by up to 20%, while using up to 35x fewer rollouts. They also report that it outperforms the leading prompt optimizer, MIPROv2, by over 10% across two LLMs.