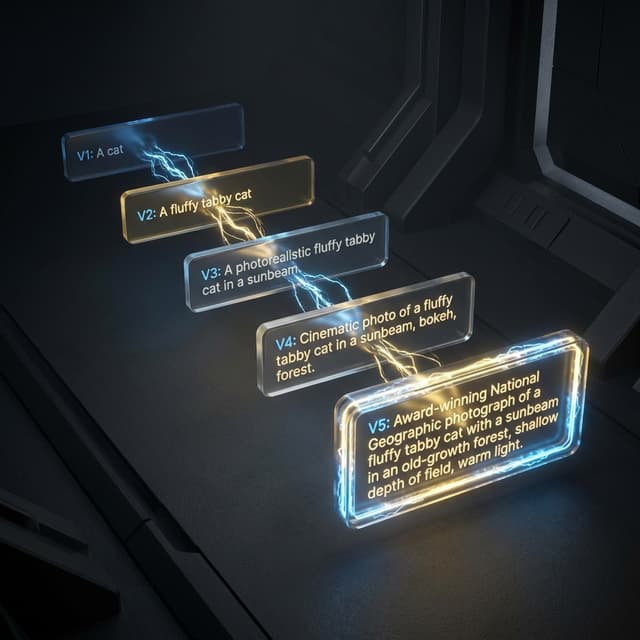

The Problem: Manual Prompt Engineering Doesn't Scale

You've written a prompt. It works... sometimes. So you tweak it. Test it. Tweak it again. Add "think step by step." Remove it. Add examples. Remove them. Try different phrasings.

After hours of this, you've maybe improved accuracy by 5%. And you have no idea if that improvement will hold on new data.

This is the prompt engineering treadmill. DSPy's optimizers are designed to get you off it.

What DSPy Optimizers Actually Do

Every DSPy optimizer answers the same question: "Given my task and some training data, what should my prompt look like?"

But they attack it from three different angles:

| Approach | What Gets Optimized | Optimizers |
|---|---|---|
| Few-shot demos | "What examples should I show?" | LabeledFewShot, BootstrapFewShot, BootstrapFewShotWithRandomSearch, KNNFewShot |
| Instructions | "How should I describe the task?" | COPRO, MIPROv2, GEPA |
| Model weights | "Can I bake this into the model itself?" | BootstrapFinetune |

Quick Reference: When to Use What

Before diving deep, here's the practical cheat sheet:

Few-Shot Demo Optimizers

- LabeledFewShot - Simply grabs k random examples from training data. No validation, no magic.

- BootstrapFewShot - The search algorithm goes through every training input in the trainset, gets a prediction and then checks whether it "passes" the metric. If it passes, the examples are added to the compiled program. It captures full traces including intermediate reasoning.

- BootstrapFewShotWithRandomSearch - This creates many candidate programs by shuffling the training set and picking the best one. It tries different numbers of bootstrapped samples and evaluates each on the validation set.

- KNNFewShot - Uses an in-memory KNN retriever to find the k nearest neighbors at test time. For each input, it identifies the most similar examples and attaches them as demonstrations.

Instruction Optimizers

- COPRO - Uses coordinate ascent (hill-climbing) to optimize instructions. It generates multiple alternative instructions, evaluates them using your metric, and iteratively selects the best-performing ones.

- MIPROv2 - Works in three stages: 1) Bootstrap few-shot examples. 2) Propose instruction candidates. 3) Find an optimized combination of both using Bayesian Optimization.

- GEPA - Reflective evolution using natural language feedback. It analyzes why things failed, not just that they failed. Use it when you need sample-efficient optimization or have rich feedback signals.

Weight Optimizer

- BootstrapFinetune - A weight-optimizing variant that invokes a teacher program to produce reasoning, then uses those traces to fine-tune a student program.

1. LabeledFewShot

Optimizes: Few-shot Demonstrations

Complexity: Simple

Best For: Quick baseline, any data size

The Idea

LabeledFewShot is the simplest optimizer—it just grabs examples directly from your training data and prepends them to prompts.

Step-by-Step

- Initialize: Specify k (number of examples to include in each prompt)

- Random Selection: Randomly select k examples from your trainset

- Format Examples: Convert selected examples into the format expected by your module's signature

- Prepend to Prompt: These examples are prepended to every prompt sent to the LM

Key Characteristics:

- No validation—examples are used as-is

- Examples are "raw" (no intermediate reasoning steps)

- Results may vary between runs due to random selection

- No bootstrapping or augmentation

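from dspy.teleprompt import LabeledFewShot

# your_program is the DSPy module to compile; trainset is a list of dspy.Example objects
optimizer = LabeledFewShot(k=8)

compiled = optimizer.compile(student=your_program, trainset=trainset)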
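The selection and prepending steps above can be sketched in plain Python. This is a minimal illustration rather than DSPy's implementation: `select_labeled_demos`, `build_prompt`, and the dictionary-based examples are hypothetical names and shapes chosen for the sketch.

```python
import random


def select_labeled_demos(trainset, k, seed=0):
    """Pick up to k training examples at random, with no validation."""
    rng = random.Random(seed)
    return rng.sample(trainset, min(k, len(trainset)))


def build_prompt(instruction, demos, question):
    """Prepend the selected demos, as-is, to the prompt for a new input."""
    lines = [instruction, ""]
    for demo in demos:
        lines.append(f"Question: {demo['question']}")
        lines.append(f"Answer: {demo['answer']}")
        lines.append("")
    lines.append(f"Question: {question}")
    lines.append("Answer:")
    return "\n".join(lines)


# Hypothetical toy data for illustration only.
trainset = [
    {"question": "2 + 2?", "answer": "4"},
    {"question": "3 + 4?", "answer": "7"},
    {"question": "9 + 1?", "answer": "10"},
    {"question": "6 + 6?", "answer": "12"},
]

demos = select_labeled_demos(trainset, k=2, seed=0)
prompt = build_prompt("Answer the arithmetic question.", demos, "5 + 6?")
print(prompt)
```

Changing the seed changes which examples are shown, which is why results can vary between runs.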

When It Works

Your examples are already high-quality. You need a baseline fast. You're testing whether few-shot helps at all before investing in fancier optimization.

When It Fails

Your training data has noise. Some examples are misleading. Different inputs need different types of examples.

2. BootstrapFewShot

Optimizes: Few-shot Demonstrations

Complexity: General

Best For: ~10 examples, when you need validated demonstrations

The Idea

BootstrapFewShot uses a "teacher" program to generate high-quality demonstrations that pass a metric, then uses these to train a "student" program.

Step-by-Step

- Setup Teacher: If no teacher is provided, the student program itself becomes the teacher (optionally compiled with LabeledFewShot first)

- Enable Tracing: Turn on compile mode to track all internal Predict calls

- Iterate Through Training Examples:

    for example in trainset:
        if we_found_enough_bootstrapped_demos():
            break

        # Run teacher on example
        prediction = teacher(**example.inputs())

        # Capture traces of all internal module calls
        predicted_traces = dspy.settings.trace

- Validate with Metric: For each prediction, check if it passes the metric:

    if metric(example, prediction, predicted_traces):
        # This is a valid demonstration!
        for predictor_name, inputs, outputs in predicted_traces:
            demo = dspy.Example(automated=True, **inputs, **outputs)
            compiled_program[predictor_name].demonstrations.append(demo)

- Collect Valid Demos: Only demonstrations that pass the metric are kept. This continues until max_bootstrapped_demos valid traces are collected.

- Combine with Labeled: The final prompt includes:

    - Up to max_labeled_demos raw examples from trainset

    - Up to max_bootstrapped_demos validated, augmented traces

Key Parameters:

- max_bootstrapped_demos=4: Augmented traces to generate

- max_labeled_demos=16: Raw demos from training set

- max_rounds=1: Attempts per example

- metric: Function to validate outputs

Why "Bootstrapped" Demos Are Special: Unlike labeled demos, bootstrapped demos include intermediate reasoning (chain-of-thought, retrieved context, etc.) because they capture the full trace of what the teacher did.
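The bootstrapping loop can be illustrated without any LM calls. The sketch below is a toy version under stated assumptions: `toy_teacher` is a hypothetical teacher with a deliberate bug, `exact_match` is a hypothetical metric, and examples are plain dictionaries rather than DSPy objects. It shows how failing predictions are discarded and how collection stops once enough demos pass.

```python
def bootstrap_demos(teacher, trainset, metric, max_bootstrapped_demos=4):
    """Run the teacher over the trainset and keep only outputs that pass the metric."""
    demos = []
    for example in trainset:
        if len(demos) >= max_bootstrapped_demos:
            break  # collected enough validated demos
        prediction = teacher(example["question"])
        if metric(example, prediction):
            # Keep the intermediate reasoning along with the final answer.
            demos.append({"question": example["question"], **prediction})
    return demos


def toy_teacher(question):
    """Hypothetical teacher: adds two integers but mishandles negative operands."""
    a, b = (int(token) for token in question.split("+"))
    total = a + b if min(a, b) >= 0 else 0  # deliberate bug for the demo
    return {"reasoning": f"{a} plus {b} is {total}", "answer": str(total)}


def exact_match(example, prediction):
    """Hypothetical metric: the predicted answer must equal the label."""
    return prediction["answer"] == example["answer"]


trainset = [
    {"question": "1 + 2", "answer": "3"},
    {"question": "-1 + 5", "answer": "4"},   # teacher gets this one wrong
    {"question": "10 + 7", "answer": "17"},
    {"question": "3 + 3", "answer": "6"},    # never reached: two demos already found
]

demos = bootstrap_demos(toy_teacher, trainset, exact_match, max_bootstrapped_demos=2)
for demo in demos:
    print(demo)
```

Each kept demo carries a `reasoning` field that the raw labeled example never had, which is the practical difference between bootstrapped and labeled demos.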

3. BootstrapFewShotWithRandomSearch

Optimizes: Few-shot Demonstrations

Complexity: Higher

Best For: 50+ examples, when you want to find optimal demo combinations

The Idea

This optimizer runs BootstrapFewShot multiple times with different random configurations and selects the best-performing program.

Step-by-Step

- Create Candidate Pool: Generate multiple candidate programs:

    - Uncompiled (baseline) program

    - LabeledFewShot optimized program

    - BootstrapFewShot with unshuffled examples

    - num_candidate_programs × BootstrapFewShot with randomized/shuffled examples

- For Each Candidate:

    for candidate_config in candidate_pool:
        # Shuffle training set differently each time
        shuffled_trainset = shuffle(trainset)

        # Vary number of bootstrapped demos (1 up to max_bootstrapped_demos)
        num_demos = random_choice(range(1, max_bootstrapped_demos + 1))

        # Compile with BootstrapFewShot, using the sampled demo count
        optimizer = BootstrapFewShot(metric=metric, max_bootstrapped_demos=num_demos)
        candidate = optimizer.compile(student, trainset=shuffled_trainset)

- Evaluate All Candidates: Run each candidate program on the validation set and compute metric scores

- Select Best: Return the program that achieved the highest score on the validation set

Key Parameters:

- num_candidate_programs=10: Number of random configurations to try

- All BootstrapFewShot parameters also apply

Why Random Search Helps: Different subsets of examples work better for different tasks. Random search explores this space efficiently without expensive exhaustive search.
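A toy version of the search loop makes the idea concrete. Everything here is a hypothetical stand-in: the parity task, the `teacher` and `student` functions, and the dictionary examples are assumptions for the sketch, not DSPy's code. The student can only answer correctly when the demos it is given cover the right case, so different shuffles and demo counts produce different validation scores.

```python
import random


def teacher(x):
    """Hypothetical teacher: always labels parity correctly."""
    return "even" if x % 2 == 0 else "odd"


def student(x, demos):
    """Hypothetical student: copies the label of the first demo with the same parity."""
    for demo in demos:
        if demo["x"] % 2 == x % 2:
            return demo["label"]
    return "unknown"


def metric(example, prediction):
    return prediction == example["label"]


def bootstrap(trainset, max_demos):
    """Keep teacher outputs that pass the metric, up to max_demos."""
    demos = []
    for example in trainset:
        if len(demos) >= max_demos:
            break
        prediction = teacher(example["x"])
        if metric(example, prediction):
            demos.append({"x": example["x"], "label": prediction})
    return demos


def evaluate(demos, valset):
    """Fraction of validation examples the student gets right with these demos."""
    return sum(metric(ex, student(ex["x"], demos)) for ex in valset) / len(valset)


def random_search(trainset, valset, num_candidate_programs=10,
                  max_bootstrapped_demos=3, seed=0):
    rng = random.Random(seed)
    best_demos, best_score = [], evaluate([], valset)  # uncompiled baseline
    for _ in range(num_candidate_programs):
        shuffled = list(trainset)
        rng.shuffle(shuffled)                            # different order each time
        size = rng.randint(1, max_bootstrapped_demos)    # vary the number of demos
        demos = bootstrap(shuffled, size)
        score = evaluate(demos, valset)
        if score > best_score:
            best_demos, best_score = demos, score
    return best_demos, best_score


trainset = [{"x": n, "label": teacher(n)} for n in range(1, 9)]
valset = [{"x": n, "label": teacher(n)} for n in range(20, 30)]
best_demos, best_score = random_search(trainset, valset)
print(best_demos, best_score)
```

Selection happens on the validation set, not the training set, so the chosen demo set is the one that generalizes best among the candidates tried.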

4. KNNFewShot

Optimizes: Few-shot Demonstrations (dynamically)

Complexity: Moderate

Best For: When different inputs benefit from different examples

The Idea

Instead of using fixed examples, KNNFewShot dynamically selects the most relevant examples for each input using semantic similarity.

Step-by-Step

- Initialize Embeddings:

    # Create embedder (e.g., using SentenceTransformers)
    embedder = Embedder(SentenceTransformer("all-MiniLM-L6-v2").encode)

    # Embed all training examples
    for example in trainset:
        example_vector = embedder.encode(example.inputs())
        store_in_index(example_vector, example)

- At Inference Time (for each new input):

    a. Convert input to vector:

        query_vector = embedder.encode(new_input)

    b. Find k nearest neighbors in the training set index

    c. Retrieve the k most similar training examples

    d. Use these as demonstrations for THIS specific query

- Dynamic Prompts: Each different input gets a customized prompt with the most relevant examples

Key Parameters:

- k=3: Number of nearest neighbors to retrieve

- trainset: Examples to search through

- vectorizer: Embedding function

When to Use:

- Your task has distinct "types" of inputs

- Different categories benefit from different examples

- One-size-fits-all demos don't work well
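The retrieval step can be sketched with a deliberately simple embedding. This example assumes a bag-of-words vector and cosine similarity in place of a real sentence-embedding model; `embed`, `cosine`, and `nearest_demos` are hypothetical helper names, and a production setup would swap in a proper embedder.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real setup would use a sentence-embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def nearest_demos(query, trainset, k=3):
    """Return the k training examples most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(
        trainset,
        key=lambda example: cosine(query_vector, embed(example["question"])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical training set with two distinct "types" of input.
trainset = [
    {"question": "what is the capital of france", "answer": "Paris"},
    {"question": "what is the capital of japan", "answer": "Tokyo"},
    {"question": "solve 2 plus 2", "answer": "4"},
    {"question": "solve 7 plus 5", "answer": "12"},
]

math_demos = nearest_demos("solve 3 plus 9", trainset, k=2)
geo_demos = nearest_demos("what is the capital of peru", trainset, k=2)
print([d["answer"] for d in math_demos])
print([d["answer"] for d in geo_demos])
```

An arithmetic query pulls in arithmetic demos and a geography query pulls in geography demos, so each input gets a prompt built from its own neighbors.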

5. COPRO (Cooperative Prompt Optimization)

Optimizes: Instructions

Complexity: Moderate

Best For: Zero-shot optimization, when you need better task descriptions

How It Works

COPRO optimizes the instruction text (not examples) using coordinate ascent (hill-climbing).

Step-by-Step:

- Initialize: Start with the current instruction for each predictor in your program

- For Each Depth Iteration (outer loop):

    for d in range(depth):
        temperature = init_temperature * (0.9 ** d)  # Cool down over time

- Generate Candidates (inner loop for each predictor):

    for predictor in program:
        # Use LM to propose new instructions
        for i in range(breadth):
            new_instruction = prompt_model.generate(
                context=[
                    "Previous instructions and scores",
                    "Propose a better instruction",
                ],
                temperature=temperature,
            )
            candidates.append(new_instruction)

- Evaluate Each Candidate:

    for candidate_instruction in candidates:
        # Create program copy with this instruction
        test_program = program.copy()
        test_program.predictor.instruction = candidate_instruction

        # Evaluate on training set
        score = evaluate(test_program, trainset, metric)

- Select Best: Keep the instruction with the highest score

- Coordinate Ascent: Repeat the process, using the history of tried instructions to inform new proposals

Key Parameters:

- breadth=10: Number of candidate instructions per iteration

- depth=3: Number of refinement iterations

- init_temperature=1.4: Controls creativity (higher = more diverse)

- prompt_model: LM used to generate instruction candidates

The Meta-Prompt Used:

"You are an instruction optimizer for large language models.

I will give some task instructions I've tried, along with their corresponding validation scores. The instructions are arranged in increasing order based on their scores, where higher scores indicate better quality.

Your task is to propose a new instruction that will lead a good language model to perform the task even better. Don't be afraid to be creative."
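The propose, evaluate, and keep-the-best loop can be sketched as a small hill climb. Both `propose` and `score` below are hypothetical stand-ins: in the real optimizer the proposals come from a prompt model and the score comes from running the program against your metric. The sketch only shows the control flow of breadth and depth.

```python
import random

# Hypothetical pool of edits the stand-in "prompt model" can suggest.
HINTS = [
    "Think step by step.",
    "Answer with a single number.",
    "Be concise.",
    "Show units.",
    "Use formal language.",
]


def propose(best_instruction, rng):
    """Stand-in for the prompt model: append one random hint to the current best."""
    return f"{best_instruction} {rng.choice(HINTS)}"


def score(instruction):
    """Stand-in for evaluating the program on the trainset with the metric."""
    useful = ("step by step", "single number")
    reward = sum(phrase in instruction for phrase in useful)
    return reward - 0.01 * len(instruction.split())  # mild penalty for long prompts


def hill_climb(seed_instruction, breadth=4, depth=3, seed=0):
    rng = random.Random(seed)
    best, best_score = seed_instruction, score(seed_instruction)
    history = [(best_score, best)]
    for _ in range(depth):
        # Generate `breadth` candidates from the current best instruction.
        candidates = [propose(best, rng) for _ in range(breadth)]
        for candidate in candidates:
            candidate_score = score(candidate)
            history.append((candidate_score, candidate))
            if candidate_score > best_score:
                best, best_score = candidate, candidate_score
    return best, best_score, history


best, best_score, history = hill_climb("Solve the math problem.")
print(best_score, best)
```

The history of scored instructions is what the real meta-prompt feeds back to the prompt model, so later proposals are informed by earlier attempts.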

6. MIPROv2 (Multiprompt Instruction Proposal Optimizer v2)

Optimizes: Instructions AND Demonstrations (jointly)

Complexity: High

Best For: 200+ examples, when you want the best possible optimization

How It Works

MIPROv2 is the comprehensive optimizer. It jointly optimizes both instructions AND demonstrations using three stages and Bayesian optimization.

Stage 1: Bootstrap Few-Shot Examples

for i in range(num_candidates):
    candidate_demos = []
    for example in random_sample(trainset):
        output = program(**example.inputs())
        if metric(example, output) > metric_threshold:
            candidate_demos.append(trace)  # trace captured from this program run
        if len(candidate_demos) >= max_bootstrapped_demos:
            break
    demo_sets[i] = candidate_demos

Stage 2: Propose Instruction Candidates (Grounded Proposal)

This stage is "data-aware and demonstration-aware":

# Gather context for instruction generation
context = {
    "dataset_summary": summarize_training_data(trainset),
    "program_code": inspect_dspy_program(program),
    "example_traces": bootstrapped_demos,
    "predictor_being_optimized": predictor_name,
}

# Generate multiple instruction candidates
for i in range(num_candidates):
    instruction = prompt_model.generate(
        f"Given this context: {context}, write an effective instruction"
    )
    instruction_candidates.append(instruction)

Stage 3: Bayesian Optimization Search

for trial in range(num_trials):
    combo = bayesian_optimizer.suggest({
        "instruction_idx": range(num_candidates),
        "demo_set_idx": range(num_demo_sets),
    })

    test_program = build_program(
        instruction=instructions[combo.instruction_idx],
        demos=demo_sets[combo.demo_set_idx],
    )

    # Evaluate on minibatch
    score = evaluate(test_program, minibatch, metric)
    bayesian_optimizer.tell(combo, score)

Key Parameters:

- num_candidates=7: Instruction/demo candidates to generate

- num_trials=15-50: Bayesian optimization iterations

- minibatch_size=25: Examples per trial evaluation

- minibatch_full_eval_steps=10: How often to do full evaluation

- auto="light"|"medium"|"heavy": Preset configurations

Why Bayesian Optimization?

| Method | Strategy | Efficiency |
|---|---|---|
| Grid Search | Try every combination | Terrible for large spaces |
| Random Search | Try random combinations | Better, but blind |
| Bayesian | Learn from each trial, focus on promising areas | Smart |
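The suggest, evaluate, tell loop of Stage 3 can be illustrated with a much simpler search rule. To be clear about the substitution: the sketch below uses an epsilon-greedy rule over running mean scores, not a Bayesian optimizer, purely to show how earlier trials steer later ones. The quality tables, `evaluate_on_minibatch`, and `suggest` are all hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical "true" quality of each instruction and demo set (unknown to the search).
INSTRUCTION_QUALITY = [0.50, 0.62, 0.55, 0.70]
DEMO_SET_QUALITY = [0.00, 0.05, 0.10]


def evaluate_on_minibatch(instr_idx, demo_idx, rng, minibatch_size=25):
    """Noisy score: fraction of a simulated minibatch answered correctly."""
    p_correct = INSTRUCTION_QUALITY[instr_idx] + DEMO_SET_QUALITY[demo_idx]
    return sum(rng.random() < p_correct for _ in range(minibatch_size)) / minibatch_size


def suggest(history, n_options, rng, epsilon=0.3):
    """Explore a random index sometimes; otherwise exploit the best mean so far."""
    if not history or rng.random() < epsilon:
        return rng.randrange(n_options)
    means = {idx: sum(scores) / len(scores) for idx, scores in history.items()}
    return max(means, key=means.get)


def search(num_trials=30, seed=0):
    rng = random.Random(seed)
    instr_hist, demo_hist, combo_hist = defaultdict(list), defaultdict(list), defaultdict(list)
    for _ in range(num_trials):
        i = suggest(instr_hist, len(INSTRUCTION_QUALITY), rng)
        j = suggest(demo_hist, len(DEMO_SET_QUALITY), rng)
        score = evaluate_on_minibatch(i, j, rng)
        # "Tell" step: record the outcome so later suggestions can use it.
        instr_hist[i].append(score)
        demo_hist[j].append(score)
        combo_hist[(i, j)].append(score)
    best = max(combo_hist, key=lambda c: sum(combo_hist[c]) / len(combo_hist[c]))
    return best, combo_hist


best_combo, combo_hist = search()
print("best (instruction_idx, demo_set_idx):", best_combo)
```

Because minibatch scores are noisy, a combination that looks best after a few trials may not be best overall, which is one reason to re-check top candidates on the full evaluation set periodically.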

7. GEPA (Genetic-Pareto Reflective Optimizer)

Optimizes: Instructions (with rich feedback)

Complexity: Sophisticated

Data needed: ~10+ examples (sample-efficient!)

The Idea

GEPA is fundamentally different from other optimizers. Instead of blind trial-and-error, it reflects on failures. It asks: "WHY did this fail? What specifically went wrong? How can we fix it?"

This is like the difference between a student who just retakes tests hoping for better luck, versus one who reviews their mistakes and learns from them.

The Core Innovation: Reflection + Pareto Frontier

GEPA maintains a Pareto frontier of candidates — prompts that each excel at something. Rather than converging on one "best" prompt, it keeps diverse specialists alive.

Each iteration:

- Sample a candidate from the Pareto frontier

- Run it on a minibatch, collect traces AND feedback

- Reflect: analyze what went wrong

- Mutate: propose an improved instruction

- Evaluate: if better, add to frontier

Step-by-Step

1. Sample from Pareto Frontier

# Candidates weighted by "coverage" - how many examples they're best on

candidate = weighted_sample(pareto_frontier)

2. Collect Traces + Feedback

for example in minibatch:
    output = candidate(**example.inputs())
    trace = capture_reasoning()
    feedback = metric.get_feedback(example, output)  # Rich text feedback!

3. Reflect on Failures

Here's the magic. GEPA sends this to a reflection LLM:

"Current instruction: [instruction]

Here's what happened:

- Example 1: FAILED. Feedback: 'Model didn't identify the variable to solve for, just computed 7+3 instead of recognizing x+3=7'

- Example 2: FAILED. Feedback: 'Multiplied instead of dividing'

- Example 3: SUCCESS.

Analyze what went wrong. Propose an improved instruction."

4. Mutate Based on Reflection

new_instruction = reflection_lm.generate(
    reflection_prompt + traces + feedback
)

5. Update Pareto Frontier

if new_candidate_is_non_dominated():
    pareto_frontier.add(new_candidate)
    remove_dominated_candidates()
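The frontier bookkeeping in steps 1 and 5 can be sketched with per-example score vectors. This is an illustrative sketch that assumes each candidate has one score per validation example. The function names and the tie-counting definition of coverage are assumptions for the example, not GEPA's exact rules.

```python
import random


def dominates(a, b):
    """True if vector a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_frontier(scores):
    """Keep candidates whose per-example score vector no other candidate dominates."""
    return {
        name: vec
        for name, vec in scores.items()
        if not any(dominates(other, vec) for o, other in scores.items() if o != name)
    }


def coverage(frontier):
    """For each candidate, count the examples on which it ties for the best score."""
    n_examples = len(next(iter(frontier.values())))
    best = [max(vec[i] for vec in frontier.values()) for i in range(n_examples)]
    return {
        name: sum(vec[i] == best[i] for i in range(n_examples))
        for name, vec in frontier.items()
    }


def sample_candidate(frontier, rng):
    """Sample a candidate with probability proportional to its coverage."""
    weights = coverage(frontier)
    names = list(frontier)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical scores: one entry per validation example (1 = pass, 0 = fail).
scores = {
    "prompt_a": [1, 1, 0, 0],
    "prompt_b": [0, 0, 1, 1],
    "prompt_c": [1, 0, 0, 0],  # dominated by prompt_a
    "prompt_d": [0, 1, 1, 0],
}

frontier = pareto_frontier(scores)
picked = sample_candidate(frontier, random.Random(0))
print(sorted(frontier), coverage(frontier), picked)
```

No single prompt here solves every example, yet three of them survive because each wins somewhere. That is the "diverse specialists" behavior described above.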

Why GEPA Outperforms

| Aspect | Traditional Optimizers | GEPA |
|---|---|---|
| Feedback | Scalar score (0.73) | Rich text ("failed because...") |
| Search | Random or gradient-based | Reflection-guided |
| Diversity | Converge to single best | Pareto frontier of specialists |
| Efficiency | Needs many rollouts | Up to 35× fewer rollouts than GRPO |

Results from the paper:

- Outperforms GRPO (reinforcement learning) by 10% on average, up to 20%

- Uses up to 35× fewer rollouts

- Outperforms MIPROv2 by 10%+ across benchmarks

When to Use GEPA

- You have rich feedback signals (not just pass/fail)

- Sample efficiency matters (limited budget)

- Your task has diverse subtypes (Pareto frontier helps)

- You want interpretable improvements (reflection explains changes)

Key Parameters:

- auto="light"|"medium"|"heavy": Optimization intensity

- reflection_lm: LLM used for reflection (can differ from task LLM)

- reflection_minibatch_size=3: Examples per reflection round

- skip_perfect_score=True: Don't reflect on successes

import dspy

optimizer = dspy.GEPA(
    metric=my_metric_with_feedback,
    auto="medium",
    reflection_lm=dspy.LM("openai/gpt-4o"),
)

compiled = optimizer.compile(student=program, trainset=trainset, valset=valset)
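What makes a metric "rich" is that it returns an explanation alongside the score. The sketch below shows that idea in plain Python. `ScoredFeedback` and the two-argument signature are hypothetical simplifications. DSPy's GEPA defines its own metric signature and return type, which should be taken from the DSPy documentation rather than from this example.

```python
from typing import NamedTuple


class ScoredFeedback(NamedTuple):
    """Hypothetical container pairing a numeric score with a textual explanation."""
    score: float
    feedback: str


def metric_with_feedback(gold_answer, predicted_answer):
    """Score a numeric answer and explain what went wrong when it is incorrect."""
    try:
        gold, pred = float(gold_answer), float(predicted_answer)
    except ValueError:
        return ScoredFeedback(0.0, f"Expected a number but got {predicted_answer!r}.")
    if gold == pred:
        return ScoredFeedback(1.0, "Correct.")
    direction = "high" if pred > gold else "low"
    return ScoredFeedback(0.0, f"Answer {pred:g} is too {direction}; expected {gold:g}.")


for predicted in ["4", "10", "four"]:
    result = metric_with_feedback("4", predicted)
    print(result.score, "-", result.feedback)
```

The three failure messages differ even though two of them share the same score of 0.0. That extra text is what a reflection step has to work with.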

8. BootstrapFinetune

Optimizes: Model Weights

Complexity: High

Data needed: 200+ examples

The Idea

BootstrapFinetune is different from all the others. Instead of changing prompts, it changes the model itself. It distills a prompt-optimized program into actual weight updates.

Step-by-Step

- Setup Teacher/Student:

    teacher_program = optimized_large_model_program  # e.g., GPT-4o
    student_program = program.deepcopy()
    student_program.set_lm(small_model)  # e.g., Llama-7B

- Bootstrap Training Data:

    training_data = []
    for example in trainset:
        # Run teacher program
        output = teacher_program(**example.inputs())

        # Capture full trace (input/output for each module)
        traces = get_all_module_traces()

        # Optionally filter with metric
        if metric is None or metric(example, output) > threshold:
            training_data.append(traces)

- Create Multi-Task Dataset: Each module in the program becomes a separate "task":

    finetune_dataset = []
    for trace in training_data:
        for module_name, (input, output) in trace.items():
            finetune_dataset.append({
                "task": module_name,
                "input": input,
                "output": output,
            })

- Fine-tune the LM:

    # Using standard LM fine-tuning
    finetuned_lm = finetune(
        base_model=small_model,
        dataset=finetune_dataset,
        **train_kwargs,  # epochs, learning_rate, etc.
    )

- Update Program: Replace LM in student program with finetuned version:

    student_program.set_lm(finetuned_lm)
    return student_program
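The dataset-building step is easy to run in isolation. The sketch below assumes each trace is a dictionary mapping a module name to an (input, output) pair, which mirrors the pseudocode above. The trace contents, `build_finetune_dataset`, and the JSONL serialization are illustrative choices, not a format DSPy prescribes.

```python
import json
from collections import Counter


def build_finetune_dataset(traces):
    """Flatten per-program traces into one training row per module call."""
    rows = []
    for trace in traces:
        for module_name, (module_input, module_output) in trace.items():
            rows.append({"task": module_name, "input": module_input, "output": module_output})
    return rows


# Hypothetical traces from a two-module program (query generation, then answering).
traces = [
    {
        "generate_query": ("Who wrote Dune?", "Dune novel author"),
        "answer": ("Dune novel author | Dune is a 1965 novel by Frank Herbert.", "Frank Herbert"),
    },
    {
        "generate_query": ("Capital of Peru?", "Peru capital city"),
        "answer": ("Peru capital city | Lima is the capital of Peru.", "Lima"),
    },
]

dataset = build_finetune_dataset(traces)
rows_per_task = Counter(row["task"] for row in dataset)

# One JSON object per line is a common layout for fine-tuning data.
jsonl = "\n".join(json.dumps(row) for row in dataset)
print(jsonl)
```

Two program runs yield four training rows because every module call becomes its own example, which is how a single fine-tuned model learns to play every role in the pipeline.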

Key Parameters:

- metric: Optional filter for training traces

- num_threads: Parallel processing for bootstrapping

- train_kwargs: Fine-tuning hyperparameters (epochs, lr, etc.)

When to Use:

- You've already optimized prompts with another optimizer

- You need to run at scale (smaller models = cheaper)

- Teacher model is expensive but available for data generation

Summary Comparison Table

| Optimizer | Optimizes | Method | Data | Best For |
|---|---|---|---|---|
| LabeledFewShot | Demos | Random selection | Any | Quick baseline |
| BootstrapFewShot | Demos | Metric-filtered | ~10 | Validated demos |
| BootstrapFewShotWithRandomSearch | Demos | Random search | ~50+ | Optimal demo sets |
| KNNFewShot | Demos | Nearest neighbor | Any | Input-dependent demos |
| COPRO | Instructions | Coordinate ascent | ~50+ | Zero-shot optimization |
| MIPROv2 | Both | Bayesian optimization | ~200+ | Comprehensive optimization |
| GEPA | Instructions | Reflective evolution | ~10+ | Sample-efficient, rich feedback |
| BootstrapFinetune | Weights | Gradient descent | ~200+ | Model distillation |

Recommended Workflow

- Start Simple: LabeledFewShot or BootstrapFewShot for quick baseline

- Add Search: BootstrapFewShotWithRandomSearch if you have more data

- Optimize Instructions: COPRO if you want zero-shot or clearer prompts

- Go Comprehensive: MIPROv2 for good results with sufficient data

- Reflect & Evolve: GEPA when you have rich feedback signals and want sample-efficient optimization

- Distill: BootstrapFinetune to compress into smaller, faster models

The key insight is that these optimizers can be composed—run MIPROv2 or GEPA, then pass the result to BootstrapFinetune for a highly optimized, efficient program.